We're collecting an (admittedly opinionated) list of high-quality annotated datasets. Most of the datasets listed below are free; however, some are not. They are classified by use case.

We're only at the beginning, and you can help by contributing to this GitHub repository!

If you're interested in this area and would like to hear more, join our Slack community (coming soon)! We'd also appreciate it if you could fill out this short form (coming soon) to help us better understand what your interests might be.

If you have ideas on how we can make this repository better, feel free to submit an issue with suggestions.

We want this resource to grow with contributions from readers and data enthusiasts. If you'd like to contribute to this GitHub repository, please read our contributing guidelines.

- M-AILABS Speech Dataset includes 1,000 hours of audio plus transcriptions. It covers multiple languages, arranged by male voices, female voices, and a mix of the two. Most of the data is based on LibriVox and Project Gutenberg.

- CREMA-D is a dataset of 7,442 original clips from 91 actors. These clips were from 48 male and 43 female actors between the ages of 20 and 74 coming from various ethnicities.

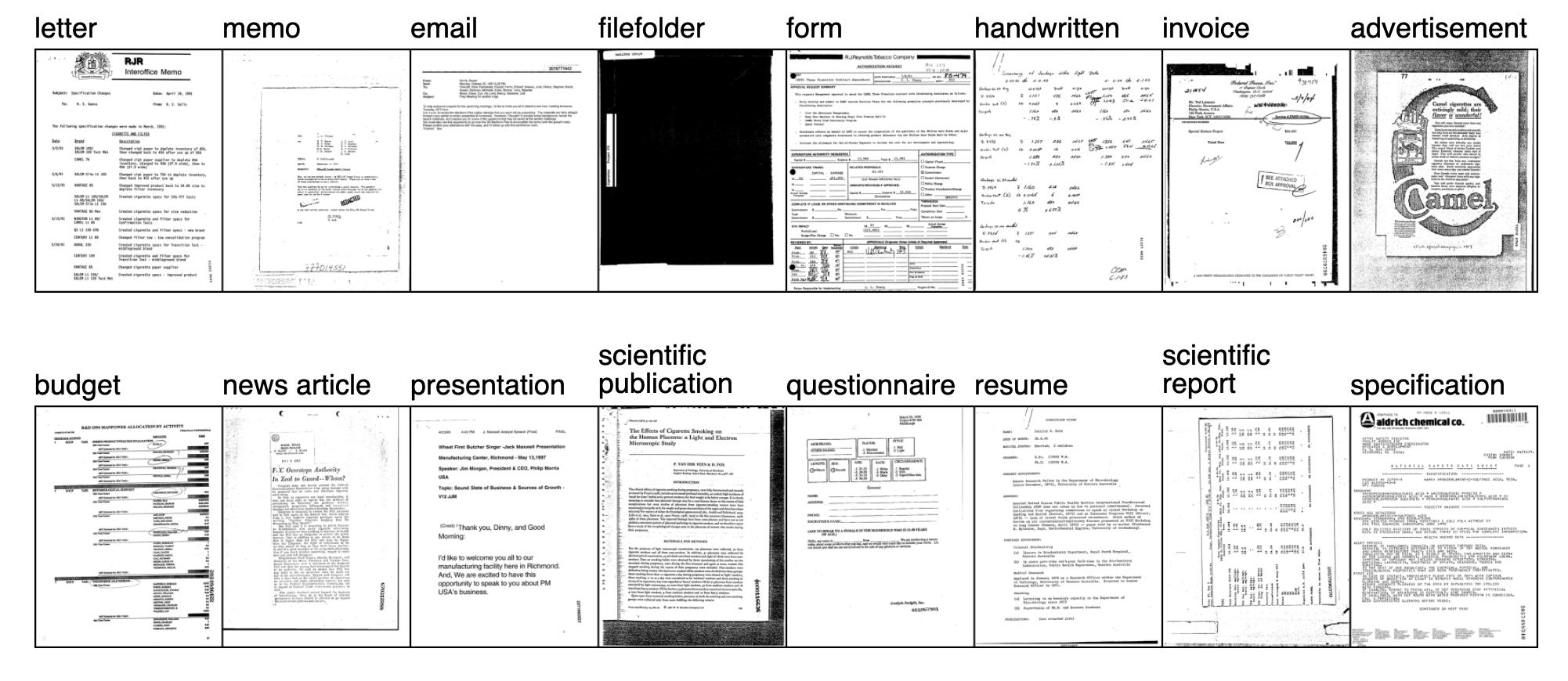

Documents are an essential part of many businesses in many fields such as law, finance and technology, among others. Automatic processing of documents such as invoices, contracts and resumes is lucrative and opens up many new business avenues. The fields of natural language processing and computer vision have seen considerable progress with the development of deep learning, so these methods have begun to be incorporated into contemporary document understanding systems.

Here is a curated list of datasets for intelligent document processing.

- GHEGA contains two groups of documents: 110 data sheets of electronic components and 136 patents. Each group is further divided into classes: data-sheet classes share the component type and producer; patent classes share the patent source.

- RVL-CDIP consists of 400,000 grayscale images in 16 classes (letter, memo, email, file folder, form, handwritten, invoice, advertisement, budget, news article, presentation, scientific publication, questionnaire, resume, scientific report, specification), with 25,000 images per class.

- Top Streamers on Twitch contains data on the top 1,000 streamers from the past year. It covers metrics such as number of viewers, number of active viewers, and followers gained, along with other relevant details about each streamer, in 11 columns.

- CORD consists of thousands of Indonesian receipts, with images and box/text annotations for OCR, and multi-level semantic labels for parsing. Labels are the bounding box position and the text of the key information.

- FUNSD is a dataset for text detection, optical character recognition, spatial layout analysis, and form understanding. It consists of 199 fully annotated forms, 31,485 words, 9,707 semantic entities, and 5,304 relations.

- The Kleister NDA dataset has 540 non-disclosure agreements, with 3,229 unique pages and 2,160 entities to extract.

- The Kleister Charity dataset consists of 2,788 annual financial reports of charity organizations, with 61,643 unique pages and 21,612 entities to extract.

- The NIST Structured Forms Database consists of 5,590 pages of binary, black-and-white images of synthesized documents: 900 simulated tax submissions, 5,590 images of completed structured form faces, and 5,590 text files containing entry field answers.

- SROIE consists of 1,000 whole scanned receipt images and annotations for the competition on scanned receipts OCR and key information extraction (SROIE). Labels are the bounding box position and the text of the key information.

- XFUND is a multilingual form understanding benchmark dataset that includes human-labeled forms with key-value pairs in 7 languages (Chinese, Japanese, Spanish, French, Italian, German, Portuguese).

- RDCL2019 contains scanned pages from contemporary magazines and technical articles.

- Synth90k consists of 9 million images covering 90k English words.

- Total Text Dataset consists of 1,555 images with more than three text orientations, including horizontal, multi-oriented, and curved.

- DocBank includes 500K document pages, with 12 types of semantic units: abstract, author, caption, date, equation, figure, footer, list, paragraph, reference, section, table, title.

- Layout Analysis Dataset contains realistic documents with a wide variety of layouts, reflecting the various challenges in layout analysis. Particular emphasis is placed on magazines and technical/scientific publications, which are likely to be the focus of digitisation efforts.

- PubLayNet is a large dataset of document images whose layout is annotated with both bounding boxes and polygonal segmentations.

- TableBank is an image-based table detection and recognition dataset built with weak supervision from Word and LaTeX documents on the internet. It contains 417K high-quality labeled tables.

- HJDataset contains over 250,000 layout element annotations of seven types. In addition to bounding boxes and masks of the content regions, it also includes the hierarchical structures and reading orders for layout elements for advanced analysis.

- AmbigQA addresses the ambiguity inherent to open-domain question answering; especially when exploring new topics, it can be difficult to ask questions that have a single, unambiguous answer. It is an open-domain question answering task that involves predicting a set of question-answer pairs, where every plausible answer is paired with a disambiguated rewrite of the original question.

- Break is a question understanding dataset, aimed at training models to reason over complex questions.

- chatterbot/english contains a wide variety of topics to train your model with, so the bot picks up information about various fields. A fully fledged bot needs a much larger dataset, but this one is suitable for getting started.

- Coached Conversational Preference Elicitation is a dataset of 12,000 annotated statements between a user and a wizard discussing movie preferences in natural language. The data were collected using the Wizard-of-Oz method between two paid workers, one of whom acts as an "assistant" and the other as a "user".

- ConvAI2 contains more than 2,000 dialogs for a PersonaChat contest, where human evaluators recruited through the Yandex.Toloka crowdsourcing platform chatted with bots submitted by teams.

- Customer Support on Twitter is a Kaggle dataset that includes more than 3 million tweets and responses from leading brands on Twitter.

- DocVQA contains 50K questions and 12K images. Images are collected from the UCSF Industry Documents Library. Questions and answers are manually annotated.

- DuReader 2.0 is a large-scale, open-domain Chinese dataset for reading comprehension (RC) and question answering (QA). It contains over 300K questions, 1.4M evidence documents, and corresponding human-generated answers.

- HotpotQA is a question answering dataset featuring natural, multi-hop questions, with a strong emphasis on supporting facts to allow for more explainable question answering systems. The dataset consists of 113,000 Wikipedia-based QA pairs.

- Maluuba goal-oriented dialogue is a set of open dialogue data where the conversation is aimed at accomplishing a task or making a decision - in particular, finding flights and a hotel. The data set contains complex conversations and decisions covering over 250 hotels, flights and destinations.

- Multi-Domain Wizard-of-Oz dataset (MultiWOZ) is a comprehensive collection of written conversations covering multiple domains and topics. The dataset contains 10,000 dialogs and is at least an order of magnitude larger than any previous task-oriented annotated corpus.

- NarrativeQA is a dataset constructed to encourage deeper understanding of language. It involves reasoning over whole books or movie scripts and contains approximately 45,000 free-text question-answer pairs.

- Natural Questions (NQ) is a large-scale corpus for training and evaluating open-domain question answering systems, and the first to replicate the end-to-end process in which people find answers to questions. It consists of 300,000 naturally occurring questions, along with human-annotated answers from Wikipedia pages, for use in training QA systems. In addition, it includes 16,000 examples where the answers (to the same questions) are provided by 5 different annotators, useful for evaluating the performance of the learned QA systems.

- OpenBookQA is inspired by open-book exams for assessing human understanding of a subject. The open book that accompanies the questions is a set of 1,329 elementary-level science facts. Approximately 6,000 questions focus on understanding these facts and applying them to new situations.

- QASC is a question answering dataset that focuses on sentence composition. It consists of 9,980 8-way multiple-choice questions on elementary school science (8,134 train, 926 dev, 920 test), and is accompanied by a corpus of 17M sentences.

- QuAC (Question Answering in Context) is a dataset for modeling, understanding, and participating in information-seeking dialogues. It contains 14K information-seeking QA dialogues (100K questions in total).

- RecipeQA is a dataset for multimodal understanding of recipes. It consists of more than 36,000 automatically generated question-answer pairs from approximately 20,000 unique recipes with step-by-step instructions and images. Each RecipeQA question involves multiple modalities such as titles, descriptions, or images, and working towards an answer requires (i) a joint understanding of images and text, (ii) capturing the temporal flow of events, and (iii) understanding procedural knowledge.

- Relational Strategies in Customer Service (RSiCS) Dataset is a dataset of travel-related customer service data from four sources: conversation logs from three commercial customer service IVAs (intelligent virtual assistants) and airline forums on TripAdvisor.com during the month of August 2016.

- Santa Barbara Corpus of Spoken American English contains approximately 249,000 words of transcription, with audio and timestamps at the level of individual intonation units.

- SGD (Schema-Guided Dialogue) dataset contains over 16K multi-domain conversations covering 16 domains.

- TREC QA Collection comes from the question answering track that TREC has run since 1999. In each track, the task was defined so that systems had to retrieve small fragments of text containing an answer to open-domain and closed-domain questions.

- The WikiQA corpus is a publicly available set of question and sentence pairs collected and annotated for research on open-domain question answering. To reflect the true information needs of general users, the authors used Bing query logs as the source of questions. Each question is linked to a Wikipedia page that potentially contains the answer.

- Ubuntu Dialogue Corpus consists of nearly one million two-person conversations from Ubuntu discussion logs, used to receive technical support for various Ubuntu-related issues. The dataset contains 930,000 dialogs and over 100,000,000 words.

- EXCITEMENTS datasets are available in English and Italian and contain negative comments from customers giving reasons for their dissatisfaction with a given company.

- OPUS was created for the standardization and translation of social media texts. It is built by randomly selecting 2,000 messages from the NUS corpus of SMS in English and then translating them into formal Chinese.

- TyDi QA is a question answering dataset covering 11 typologically diverse languages with 204K question-answer pairs.

- A Dataset for Detecting Flying Airplanes on Satellite Images contains satellite images of areas of interest surrounding 30 different European airports. It also provides ground-truth annotations of flying airplanes in part of those images to support future research involving flying airplane detection. This dataset is part of the work entitled "Measuring economic activity from space: a case study using flying airplanes and COVID-19" published by the IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing.

- Airbus Aircraft Detection can be used to detect the number, size, and type of aircraft present at an airport. In turn, this can provide information about the activity of any airport.

- Highway Traffic Videos Dataset is a database of videos of highway traffic used in [1] and [2]. The video was taken over two days from a stationary camera overlooking I-5 in Seattle, WA. The videos were labeled manually as light, medium, and heavy traffic, which correspond respectively to free-flowing traffic, traffic at reduced speed, and stopped or very slow traffic.

- iSAID contains 655,451 object instances from 15 categories across 2,806 high-resolution images. iSAID uses pixel-level annotations. The object categories include plane, ship, storage tank, baseball diamond, tennis court, basketball court, ground track field, harbor, bridge, large vehicle, small vehicle, helicopter, roundabout, soccer ball field, and swimming pool. The images are mainly collected from Google Earth.

- Traffic Analysis in Original Video Data of Ayalon Road is composed of 81 videos (14 hours) recording the traffic on Ayalon Road. It can be used to detect and track vehicles over consecutive frames.

- Casting Product Image Data for Quality Inspection contains a total of 7,348 images. All are 300×300-pixel grayscale images, and augmentation has already been applied to all of them.

- DAGM 2007 is a synthetic dataset for defect detection on textured surfaces. It was originally created for a competition at the 2007 symposium of the DAGM.

- MVTec AD is a dataset for benchmarking anomaly detection methods with a focus on industrial inspection. It contains over 5,000 high-resolution images divided into fifteen different object and texture categories. Each category comprises a set of defect-free training images and a test set of images with various kinds of defects as well as images without defects.

- Oil Storage Tanks contains nearly 200 satellite images taken from Google Earth of tank-containing industrial areas around the world. Images are annotated with bounding box information for floating head tanks in the image. Fixed head tanks are not annotated.

- KolektorSDD (Kolektor Surface-Defect Dataset) is a dataset for detecting surface defects.

- Aerial imagery object identification dataset for building and road detection, and building height estimation: for 25 locations across 9 U.S. cities, this dataset provides (1) high-resolution aerial imagery; (2) annotations of over 40,000 building footprints (OSM shapefiles) as well as road polylines; and (3) topographical height data (LIDAR). This dataset can be used as ground truth to train computer vision and machine learning algorithms for object identification and analysis, in particular for building detection and height estimation, as well as road detection.

- BRATS 2016 is a brain tumor segmentation dataset. It shares the same training set as BRATS 2015, which consists of 220 HGG (high-grade glioma) and 54 LGG (low-grade glioma) cases. Its testing dataset consists of 191 cases with unknown grades.

- CheXpert dataset contains 224,316 chest radiographs of 65,240 patients with both frontal and lateral views available. The task is to do automated chest x-ray interpretation, featuring uncertainty labels and radiologist-labeled reference standard evaluation sets.

- CT Medical Images is designed to allow different methods to be tested for examining the trends in CT image data associated with the use of contrast and patient age. The data are a tiny subset of images from The Cancer Imaging Archive. They consist of the middle slice of all CT images taken where valid age, modality, and contrast tags could be found. This results in 475 series from 69 different patients.

- Open Access Series of Imaging Studies (OASIS) is a retrospective compilation of data for more than 1,000 participants, collected across several ongoing projects through the WUSTL Knight ADRC over the course of 30 years. Participants include 609 cognitively normal adults and 489 individuals at various stages of cognitive decline, ranging in age from 42 to 95 years.

- TMED (Tufts Medical Echocardiogram Dataset) contains imagery from 2773 patients and supervised labels for two classification tasks from a small subset of 260 patients (because labels are difficult to acquire). All data is de-identified and approved for release by our IRB. Imagery comes from transthoracic echocardiograms acquired in the course of routine care consistent with American Society of Echocardiography (ASE) guidelines, all obtained from 2015-2020 at Tufts Medical Center.

Named-entity recognition (NER) (also known as (named) entity identification, entity chunking, and entity extraction) is a subtask of information extraction that seeks to locate and classify named entities mentioned in unstructured text into pre-defined categories such as person names, organizations, locations, medical codes, time expressions, quantities, monetary values, percentages, etc.

State-of-the-art NER systems for English produce near-human performance. For example, the best system entering MUC-7 scored an F-measure of 93.39%, while human annotators scored 97.60% and 96.95%.
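
For readers unfamiliar with the F-measure quoted above, here is a minimal sketch of how it is usually computed from precision and recall. The function names and the entity counts are hypothetical and chosen for illustration only; they are not the MUC-7 scorer or its data.

```python
from typing import Tuple


def precision_recall(true_positives: int,
                     false_positives: int,
                     false_negatives: int) -> Tuple[float, float]:
    """Compute precision and recall from entity-level counts."""
    predicted = true_positives + false_positives
    actual = true_positives + false_negatives
    precision = true_positives / predicted if predicted else 0.0
    recall = true_positives / actual if actual else 0.0
    return precision, recall


def f_measure(precision: float, recall: float, beta: float = 1.0) -> float:
    """Weighted harmonic mean of precision and recall (F1 when beta == 1)."""
    if precision == 0.0 and recall == 0.0:
        return 0.0
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


# Hypothetical counts: 90 correct entities, 10 spurious, 30 missed.
p, r = precision_recall(true_positives=90, false_positives=10, false_negatives=30)
print(f"precision={p:.2f} recall={r:.2f} F1={f_measure(p, r):.4f}")
```

Because the F-measure is a harmonic mean, a system cannot score well by maximizing only precision or only recall.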

- CCCS-CIC-AndMal-2020 is a large, comprehensive Android malware dataset. It includes 200K benign and 200K malware samples, totalling 400K Android apps, with 14 prominent malware categories and 191 eminent malware families.

- Malware consists of texts about malware. It was developed by researchers at the Singapore University of Technology and Design and DSO National Laboratories.

- re3d focuses on entity and relationship extraction relevant to somebody operating in the role of a defence and security intelligence analyst.

- SEC-filings is generated using CoNLL 2003 data and financial documents obtained from U.S. Securities and Exchange Commission (SEC) filings.

- AnEM consists of abstracts and full-text biomedical papers.

- CADEC is a corpus of adverse drug event annotations.

- i2b2-2006 is the De-identification and Smoking Challenge dataset.

- i2b2-2014 is the 2014 De-identification and Heart Disease Risk Factors Challenge dataset.

- CoNLL 2003 is an annotated dataset for named entity recognition. Each token is labeled with one of 9 possible tags.

- MUC-6 contains the 318 annotated Wall Street Journal articles, the scoring software, and the corresponding documentation used in the MUC-6 evaluation.

- MITMovie is a semantically tagged training and test corpus in BIO format.

- MITRestaurant is a semantically tagged training and test corpus in BIO format.

- Enron contains over half a million anonymized emails from over 100 users. It’s one of the few publicly available collections of “real” emails for study and training sets.

- WNUT17 is the dataset for the WNUT 17 Emerging Entities task. It contains text from Twitter, Stack Overflow responses, YouTube comments, and Reddit comments.

- Assembly is a dataset for Named Entity Recognition (NER) from assembly operations text manuals.

- BTC is the Broad Twitter corpus, a dataset of tweets collected over stratified times, places and social uses.

- Ritter is the same as the training portion of WNUT16 (though with sentences in a different order).

- BBN contains approximately 24,000 pronoun coreferences as well as entity and numeric annotation for approximately 2,300 documents.

- Groningen Meaning Bank (GMB) comprises thousands of texts in raw and tokenised format, tags for part of speech, named entities and lexical categories, and discourse representation structures compatible with first-order logic.

- OntoNotes 5 is a large corpus comprising various genres of text (news, conversational telephone speech, weblogs, usenet newsgroups, broadcast, talk shows) in three languages (English, Chinese, and Arabic) with structural information (syntax and predicate argument structure) and shallow semantics (word sense linked to an ontology and coreference).

- GUM-3.1.0 is the Georgetown University Multilayer Corpus.

- wikigold is a manually annotated collection of Wikipedia text.

- WikiNEuRal is a high-quality automatically-generated dataset for Multilingual Named Entity Recognition.

- QUAERO French Medical Corpus was initially developed as a resource for named entity recognition and normalization.

- Europeana Newspapers (Dutch, French, German) is a set of named entity recognition corpora for Dutch, French, and German built from Europeana Newspapers.

- CAp 2017 - (Twitter data) concerns the problem of Named Entity Recognition (NER) for tweets written in French.

- DBpedia abstract corpus contains a conversion of Wikipedia abstracts in seven languages (Dutch, English, French, German, Italian, Japanese, and Spanish) into the NLP Interchange Format (NIF).

- WikiNER is a multilingual named entity recognition dataset from Wikipedia.
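
Several corpora in this list (for example MITMovie and MITRestaurant) are distributed in BIO format, where each token carries a `B-` (begin), `I-` (inside), or `O` (outside) tag. The sketch below shows one way to group such tags into entity spans. The helper name `bio_to_spans`, the sample sentence, and the `DIRECTOR` label are hypothetical and used only for illustration; check each dataset's documentation for its actual label set and file layout.

```python
from typing import List, Tuple


def bio_to_spans(tokens: List[str], tags: List[str]) -> List[Tuple[str, str]]:
    """Group BIO-tagged tokens into (label, text) entity spans."""
    spans: List[Tuple[str, str]] = []
    current_label = None
    current_tokens: List[str] = []

    for token, tag in zip(tokens, tags):
        # A tag is either "O" or "<prefix>-<label>" with prefix B or I.
        prefix, _, label = tag.partition("-")
        continues = prefix == "I" and label == current_label

        # Close the open entity unless this token continues it.
        if not continues and current_label is not None:
            spans.append((current_label, " ".join(current_tokens)))
            current_label, current_tokens = None, []

        if continues:
            current_tokens.append(token)
        elif prefix in ("B", "I"):
            # "B-" starts a new entity; a stray "I-" is treated leniently
            # as the start of a new entity as well.
            current_label, current_tokens = label, [token]

    # Flush an entity that runs to the end of the sentence.
    if current_label is not None:
        spans.append((current_label, " ".join(current_tokens)))
    return spans


# Hypothetical example sentence and tags.
tokens = ["show", "me", "films", "directed", "by", "steven", "spielberg"]
tags = ["O", "O", "O", "O", "O", "B-DIRECTOR", "I-DIRECTOR"]
print(bio_to_spans(tokens, tags))
```

Treating a stray `I-` tag as the start of a new entity is a design choice made here for robustness; a stricter reader could raise an error instead.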


Coming soon! 😘
