This repository contains all the code to run the Cognitive AR end-to-end demo.

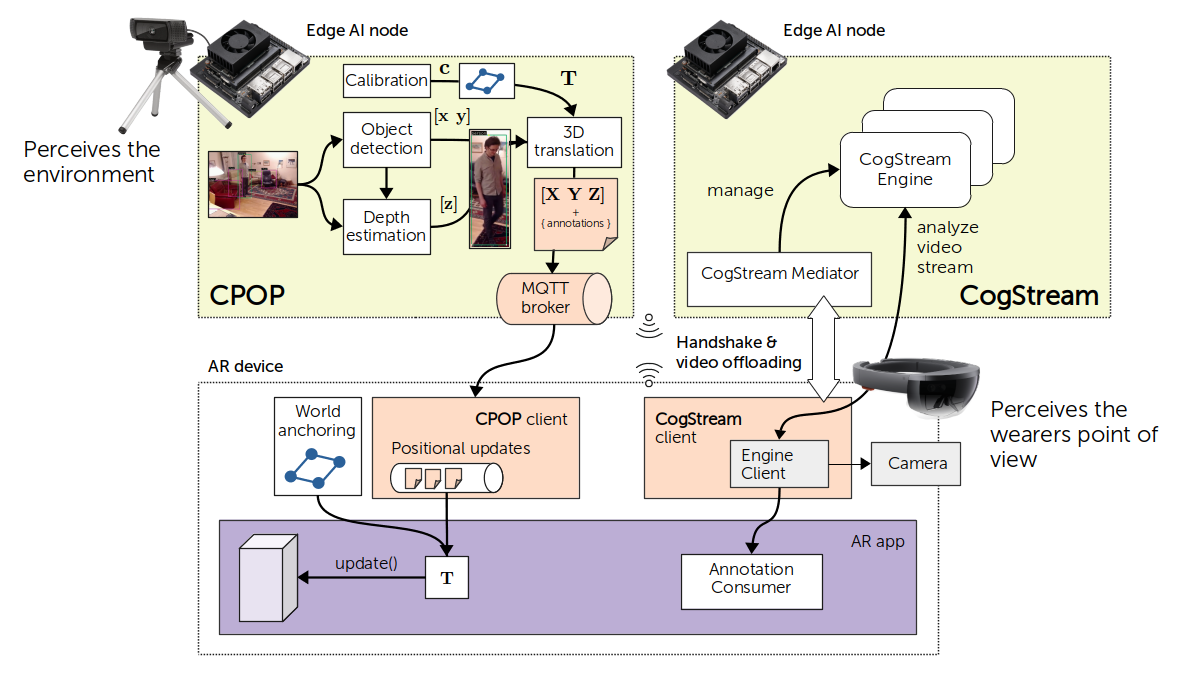

The following figure shows a rough overview of what we will set up:

The setup includes the following steps:

- Setting up the edge node (alternatively, you can run everything on your computer)

- Setting up CPOP

- Calibrating the camera parameters

- Anchoring the camera to the common origin

- Starting the MQTT broker

- Starting the CPOP server

- Setting up CogStream

- Setting up the HoloLens app

- Configuring the IP addresses of the components

- Building and deploying to the HoloLens

- Starting the platform

- Starting the HoloLens app

CognitiveXR is built to run on GPU-accelerated edge computing hardware, such as NVIDIA Jetson devices. Our edge-node documentation gives instructions on how to build your own edge cluster.

If you want to run the platform on your computer, you need:

- Docker (for building and running the platform components)

- Python (for calibrating CPOP)

- A webcam (for CPOP)

- (optional) An Intel RealSense camera (for depth estimation)

- (optional) An NVIDIA GPU with CUDA 11 and the NVIDIA Docker Toolkit
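Before continuing, it can help to check that the required tools are actually on your `PATH`. The following is a minimal sketch, not part of this repository. The executable names `python3` and `nvidia-smi` are assumptions (on some systems the interpreter is called `python`, and `nvidia-smi` only indicates that an NVIDIA driver is installed, not that the NVIDIA Docker Toolkit is set up).

```python
import shutil

# Executable names are assumptions; adjust them to your system.
REQUIRED = ("docker", "python3")
OPTIONAL = ("nvidia-smi",)  # only relevant for the optional GPU setup


def check_tools(names):
    """Map each executable name to True if it is found on PATH."""
    return {name: shutil.which(name) is not None for name in names}


if __name__ == "__main__":
    for name, found in check_tools(REQUIRED + OPTIONAL).items():
        kind = "required" if name in REQUIRED else "optional"
        print(f"{name} ({kind}): {'found' if found else 'missing'}")
```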

To calibrate the intrinsic parameters of the camera that will be used for CPOP, please follow these steps: https://github.com/cognitivexr/cpop#intrinsic-camera-parameters.

For this, you will need to run CPOP on your host machine or use X11 forwarding, as you require a working OpenCV UI to validate the camera calibration.

The generated files (e.g., default__intrinsic_1024x576.json) are placed into ~/.cpop/cameras and need to be copied into etc/cpop/cameras in this repository.
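Copying the files can be done by hand or with a small script. The sketch below is one way to do it, assuming it is run from the repository root; the helper name `copy_camera_files` is hypothetical and not part of CPOP.

```python
import shutil
from pathlib import Path


def copy_camera_files(src_dir, dst_dir, pattern="*.json"):
    """Copy camera parameter files from src_dir to dst_dir.

    Returns the sorted list of copied file names. If src_dir does not
    exist, nothing is copied and an empty list is returned.
    """
    src = Path(src_dir).expanduser()
    dst = Path(dst_dir)
    if not src.is_dir():
        return []
    dst.mkdir(parents=True, exist_ok=True)
    copied = []
    for path in sorted(src.glob(pattern)):
        shutil.copy2(path, dst / path.name)
        copied.append(path.name)
    return copied


if __name__ == "__main__":
    # Paths taken from the text above; run from the repository root.
    print(copy_camera_files("~/.cpop/cameras", "etc/cpop/cameras"))
```

The same helper also picks up the extrinsic parameter file once it has been generated, since it copies every JSON file in the source directory.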

Here is a video showing the procedure. The more angles and good frames you capture, the better the result will be.

cpop-calibration.mp4

Once the intrinsic camera parameters are determined, we can anchor the camera to a common origin. The exact steps are described here: https://github.com/cognitivexr/cpop#extrinsic-camera-parameters.

First, place the camera where it should perceive the environment from.

Print the ArUco marker and put it on the ground where it is visible to the camera.

After anchoring, you should not move the camera. Otherwise, the coordinates sent to other devices will be wrong.

Use the python -m cpop.cli.anchor command to run the anchoring.

Copy the default__extrinsic.json file from ~/.cpop/cameras into etc/cpop/cameras.

Here is what the procedure looks like (marker is on the table for demonstration purposes).

cpop-anchoring.mp4

The camera is now calibrated and ready to be used in the CPOP server!

Either use docker-compose up, or start services individually.

The docker-compose.yml contains a basic Compose configuration that starts up all system components.

The .env file contains relevant parameters.

The etc/ folder contains several configuration files that are mounted into the system components.

We currently don't host pre-built Docker images, so you will have to build them yourself.

You can find build instructions for the docker images in the respective repositories.

In etc/engines/, you can find the engine specifications that describe how the mediator starts engines.

If you wish to use the CPU images for the fermx or yolov5 engine, you can change the tag from cuda-110 to cpu.

| Variable | Description |
|---|---|
| CPOP_CAMERA_DEVICE | The camera device of the host (e.g., /dev/video0 for the default camera) |
| CPOP_CAMERA_MODEL | The camera model (e.g., c920) to reference a particular set of camera parameters in etc/cpop/cameras |
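To verify that parameter files exist for the configured camera model before starting the platform, a check along the following lines may be useful. This is a sketch under an assumption: the naming scheme `<model>__intrinsic_<width>x<height>.json` and `<model>__extrinsic.json` is inferred from the example file names in this document (default__intrinsic_1024x576.json, default__extrinsic.json) and is not confirmed by the CPOP documentation. The helper `find_camera_files` and the fallback model name `default` are hypothetical.

```python
import os
from pathlib import Path


def find_camera_files(model, cameras_dir="etc/cpop/cameras"):
    """Return the intrinsic and extrinsic parameter files found for a model.

    The file naming scheme is an assumption inferred from the example
    file names in this README.
    """
    base = Path(cameras_dir)
    if not base.is_dir():
        return {"intrinsic": [], "extrinsic": []}
    return {
        "intrinsic": sorted(p.name for p in base.glob(f"{model}__intrinsic_*.json")),
        "extrinsic": sorted(p.name for p in base.glob(f"{model}__extrinsic.json")),
    }


if __name__ == "__main__":
    # "default" as fallback is an assumption, not a documented CPOP default.
    model = os.environ.get("CPOP_CAMERA_MODEL", "default")
    found = find_camera_files(model)
    for kind, names in found.items():
        print(f"{model} {kind}: {names if names else 'missing'}")
```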

You can use and start any MQTT-compliant broker. The MQTT broker host and port need to be configured when

- starting CPOP (via the environment variables CPOP_BROKER_PORT and CPOP_BROKER_HOST).

- building the HoloLens app: https://github.com/cognitivexr/cognitivexr-unity-client
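A wrong broker address is an easy mistake to make, so it can be worth checking that the configured host and port accept TCP connections before starting CPOP or building the app. The sketch below only tests TCP reachability and does not speak MQTT. The fallback values `localhost` and `1883` (the standard unencrypted MQTT port) are assumptions, not documented CPOP defaults.

```python
import os
import socket


def broker_reachable(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # Fallback values are assumptions; set the variables explicitly if unsure.
    host = os.environ.get("CPOP_BROKER_HOST", "localhost")
    port = int(os.environ.get("CPOP_BROKER_PORT", "1883"))
    state = "reachable" if broker_reachable(host, port) else "not reachable"
    print(f"MQTT broker at {host}:{port} is {state}")
```

Run the check from the machine that will host CPOP, and use the same address when configuring the HoloLens app, since the headset must reach the broker over the network as well.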

See the CPOP documentation on how to run the CPOP service manually.

See the CogStream documentation on how to build and run the mediator.

Our Unity Demo App can be built for and deployed on the HoloLens 2. It contains two scenes that demonstrate the integration of the HoloLens with the CognitiveXR platform.

This app demonstrates the integration with CPOP. The AR device doesn't do any tracking itself; it only receives, in real time, the sensor data that CPOP collects from the environment.

cognitivexr-demo-tracking.mp4

Once the HoloLens app is deployed, you can select the fermx engine in the app. The HoloLens will connect to the mediator, which creates a new analytics engine that then processes the video feed.