Author: @yunzhusong, @theblackcat102, @redman0226, Huiao-Han Lu, Hong-Han Shuai

Code implementation for Character-Preserving Coherent Story Visualization

Objects in pictures should so be arranged as by their very position to tell their own story.

- Johann Wolfgang von Goethe (1749-1832)

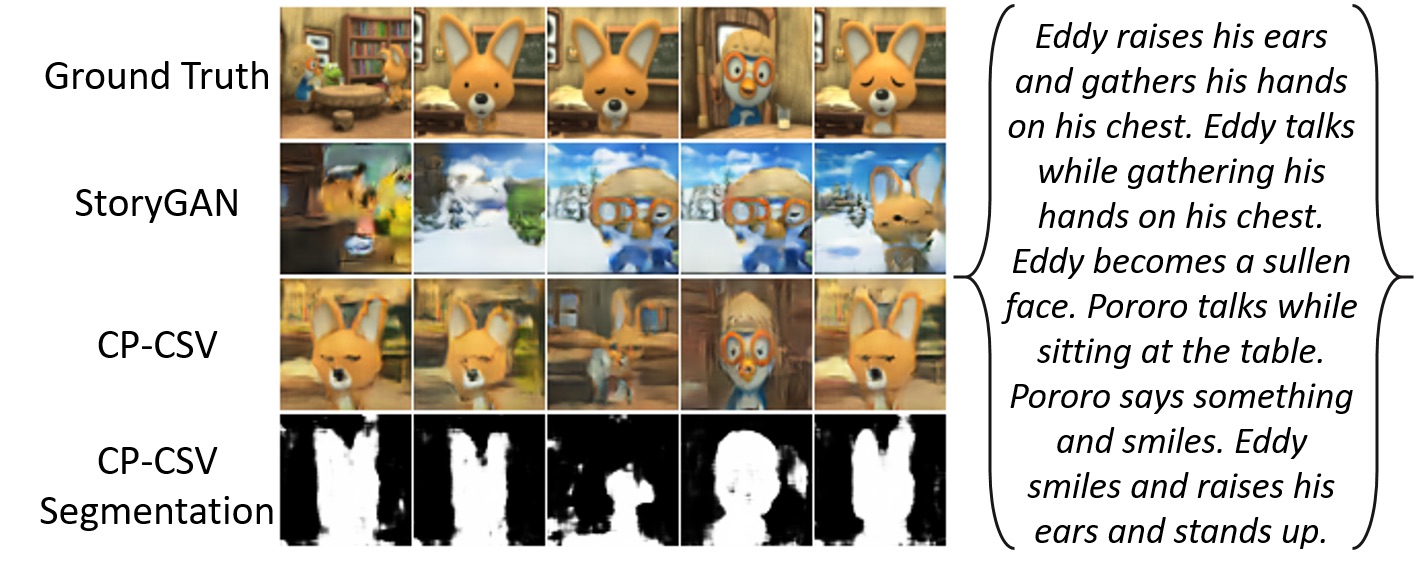

In this paper we propose a new framework named Character-Preserving Coherent Story Visualization (CP-CSV) to tackle the challenges in story visualization: generating a sequence of images that emphasizes preserving the global consistency of characters and scenes across different story pictures.

CP-CSV effectively learns to visualize the story by three critical modules: story and context encoder (story and sentence representation learning), figure-ground segmentation (auxiliary task to provide information for preserving character and story consistency), and figure-ground aware generation (image sequence generation by incorporating figure-ground information). Moreover, we propose a metric named Frechet Story Distance (FSD) to evaluate the performance of story visualization. Extensive experiments demonstrate that CP-CSV maintains the details of character information and achieves high consistency among different frames, while FSD better measures the performance of story visualization.

-

PORORO images and segmentation images can be downloaded here. Pororo, original pororo datasets with self labeled segmentation mask of the character.

-

CLEVR with segmentation mask, 13755 sequence of images, generate using Clevr-for-StoryGAN

images/

CLEVR_new_013754_1.png

CLEVR_new_013754_1_mask.png

CLEVR_new_013754_2.png

CLEVR_new_013754_2_mask.png

CLEVR_new_013754_3.png

CLEVR_new_013754_3_mask.png

CLEVR_new_013754_4.png

CLEVR_new_013754_4_mask.png

Download link

virtualenv -p python3 env

source env/bin/activate

pip install -r requirements.txt

-

Download the Pororo dataset and put at DATA_DIR, downloaded. The dataset should contain SceneDialogues/ ( where gif files reside ) and *.npy files.

-

Modify the DATA_DIR in ./cfg/final.yml

-

The dafault hyper-parameters in ./cfg/final.yml are set to reproduce the paper results. To train from scratch:

./script.sh

- To run the evaluation, specify the --cfg to ./output/yourmodelname/setting.yml, e.g.,:

./script_inference.sh

Pretrained model (final_model.zip) can be download here.

-

Download the Pororo dataset and put at DATA_DIR, downloaded. The dataset should contain SceneDialogues/ ( where gif files reside ) and *.npy files.

-

Download the pretrained model, download, and put in the ./output directory.

-

Modify the DATA_DIR in ./cfg/final.yml

-

To evaluate the pretrained model:

./script_inference.sh

Use the tensorboard to check the results.

tensorboard --logdir output/ --host 0.0.0.0 --port 6009

The slide and the presentation video can be found in slides.

@inproceedings{song2020CPCSV,

title={Character-Preserving Coherent Story Visualization},

author={Song, Yun-Zhu and Tam, Zhi-Rui and Chen, Hung-Jen and Lu, Huiao-Han and Shuai, Hong-Han},

booktitle={Proceedings of the European Conference on Computer Vision (ECCV)},

year={2020}

}